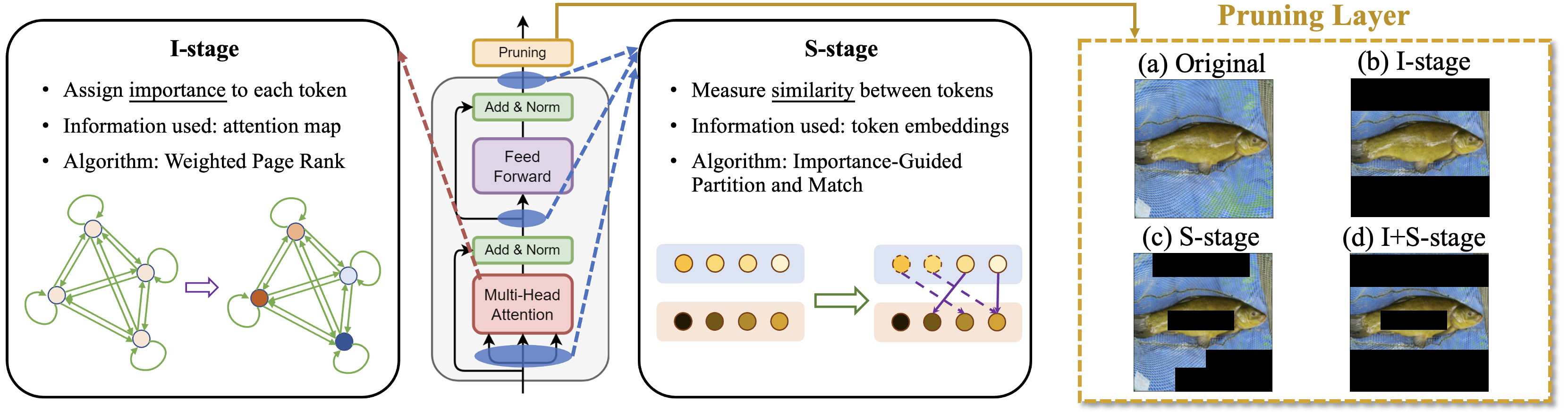

Visualized examples of the pruning process conducted by Zero-TPrune. Images are randomly selected from the ImageNet validation set. When the pruning rate is aggressive and the main object occupies most of the image area, pruning only background tokens is not enough; Zero-TPrune also exploits the similarity between main-object tokens and prunes redundant ones.

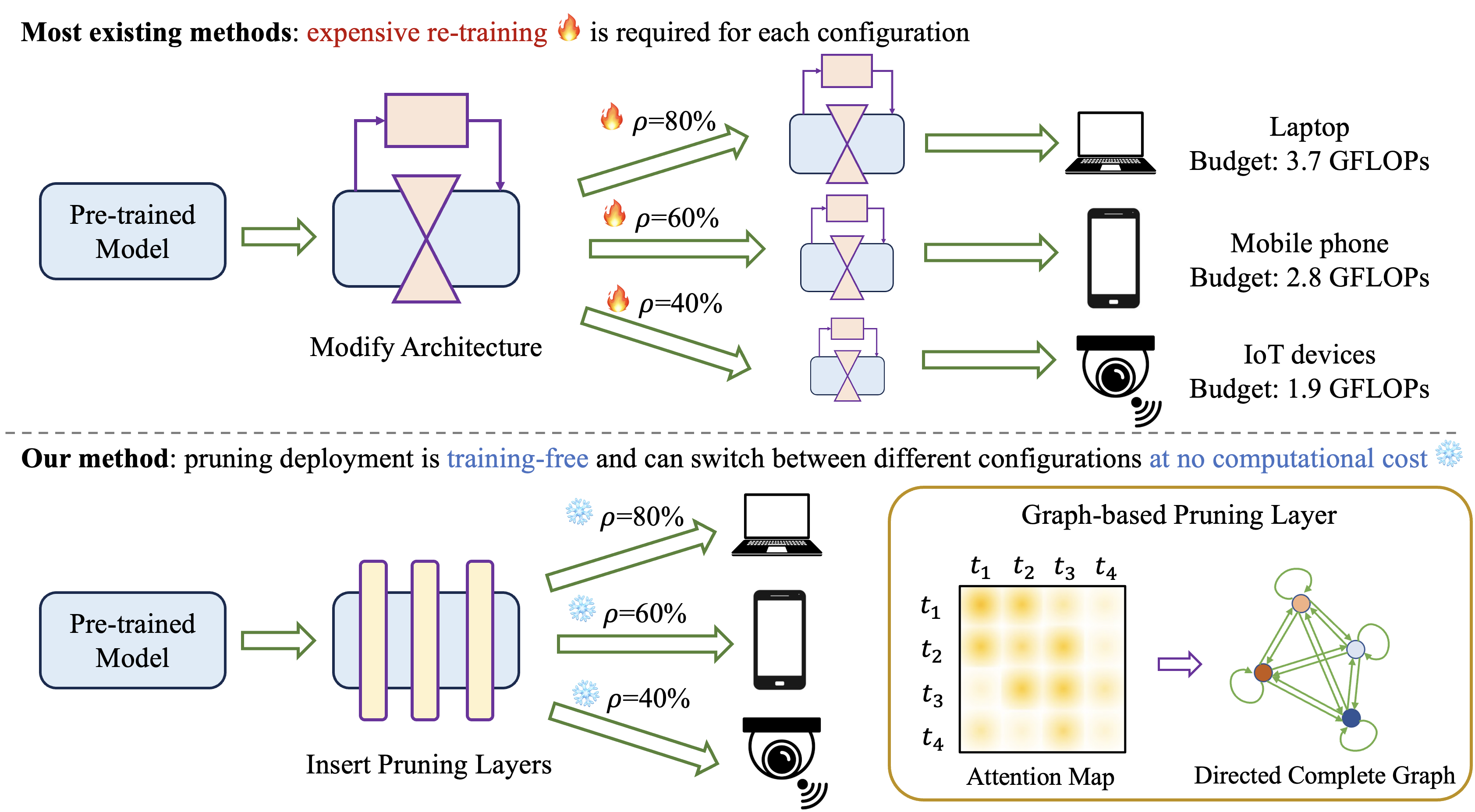

Deploying Transformer models on edge devices is becoming increasingly challenging due to their rapidly growing inference cost, which scales quadratically with the number of tokens in the input sequence. Token pruning is an emerging solution to this challenge because it is easy to deploy on various Transformer backbones. However, most token pruning methods require computationally expensive fine-tuning, which is undesirable in many edge deployment scenarios.
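
To make the quadratic term concrete, the following Python sketch counts multiply-accumulates (MACs) in one standard ViT encoder block. It assumes the usual block layout (QKV projection, attention, output projection, and an MLP with expansion ratio 4) and ignores softmax, LayerNorm, and activations; the function name `vit_block_macs` is hypothetical. The linear layers cost O(N·d²) while the two attention matrix products cost O(N²·d), so halving the token count halves the former but quarters the latter.

```python
def vit_block_macs(num_tokens: int, dim: int, mlp_ratio: int = 4) -> dict:
    """Approximate multiply-accumulates (MACs) of one standard ViT encoder block.

    Ignores softmax, LayerNorm, GELU, biases, and residual additions.
    """
    n, d = num_tokens, dim
    # Linear in n: QKV projection (3*n*d*d), output projection (n*d*d),
    # and the two MLP layers (2 * mlp_ratio * n*d*d).
    linear = (4 + 2 * mlp_ratio) * n * d * d
    # Quadratic in n: Q @ K^T (n*n*d) and attention weights @ V (n*n*d).
    quadratic = 2 * n * n * d
    return {"linear": linear, "quadratic": quadratic, "total": linear + quadratic}


if __name__ == "__main__":
    # DeiT-S-like block: 196 patch tokens + 1 [CLS] token, width 384.
    full = vit_block_macs(197, 384)
    print(full["total"])  # 378391296
    # Halving the tokens halves the linear term but quarters the quadratic term.
    a, b = vit_block_macs(200, 384), vit_block_macs(100, 384)
    print(a["quadratic"] // b["quadratic"], a["linear"] // b["linear"])  # 4 2
```

With DeiT-S dimensions (197 tokens, width 384), one block costs about 378 M MACs, or roughly 4.5 G over 12 blocks before counting the patch embedding and classifier head.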

In this work, we propose Zero-TPrune, the first zero-shot method that considers both the importance and similarity of tokens in performing token pruning. It leverages the attention graph of pre-trained Transformer models to produce an importance distribution for tokens via our proposed Weighted Page Rank (WPR) algorithm. This distribution further guides token partitioning for efficient similarity-based pruning.
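
One way to turn an attention map into a token-importance distribution is PageRank-style power iteration on the attention graph, where a token is important if important tokens attend to it. The NumPy sketch below is a plain power iteration on the head-averaged attention matrix; averaging the heads, the uniform initialization, and the function name `weighted_page_rank` are our assumptions, and the exact WPR formulation in the paper (for example, how heads or the [CLS] token are treated) may differ.

```python
import numpy as np


def weighted_page_rank(attn: np.ndarray, num_iters: int = 50, tol: float = 1e-8) -> np.ndarray:
    """PageRank-style token importance from an attention map (illustrative sketch).

    attn: (heads, N, N) or (N, N), where attn[..., i, j] is the attention that
    query token i pays to key token j; each row is assumed to sum to 1 (softmax).
    Returns a length-N importance distribution that sums to 1.
    """
    if attn.ndim == 3:
        attn = attn.mean(axis=0)  # assumption: average the heads into one graph
    n = attn.shape[-1]
    scores = np.full(n, 1.0 / n)  # start from a uniform distribution
    for _ in range(num_iters):
        # Token j collects importance from every token i that attends to it,
        # weighted by the attention A[i, j] and by token i's own importance.
        new_scores = attn.T @ scores
        converged = np.abs(new_scores - scores).sum() < tol
        scores = new_scores
        if converged:
            break
    return scores


# Toy example: every token sends 90% of its attention to token 0.
n = 5
toy = np.full((n, n), 0.1 / (n - 1))
toy[:, 0] = 0.9
print(weighted_page_rank(toy).round(3))
```

In the toy example, token 0 receives a score of about 0.9 and every other token about 0.025. Because softmax rows sum to 1, each update `attn.T @ scores` preserves the total score, and because every entry of a softmax attention matrix is positive, the iteration converges to a unique stationary distribution.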

Due to the elimination of the fine-tuning overhead, Zero-TPrune can prune large models at negligible computational cost, switch between different pruning configurations at no computational cost, and perform hyperparameter tuning efficiently.

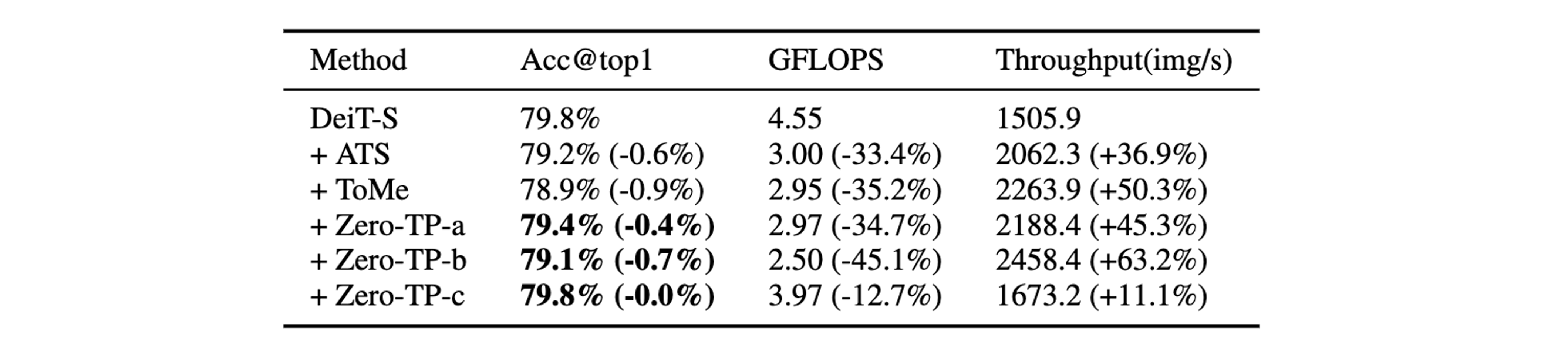

We evaluate the performance of Zero-TPrune on vision tasks by applying it to various vision Transformer backbones and testing them on ImageNet. Without any fine-tuning, Zero-TPrune reduces the FLOPs cost of DeiT-S by 34.7% and improves its throughput by 45.3% with only 0.4% accuracy loss. Compared with state-of-the-art pruning methods that require fine-tuning, Zero-TPrune eliminates the need for fine-tuning after pruning while incurring only 0.1% accuracy loss. Compared with state-of-the-art fine-tuning-free pruning methods, Zero-TPrune reduces accuracy loss by up to 49% with the same or higher throughput.

The overall Zero-TPrune framework. Pruning layers can be inserted between Transformer blocks to reduce the number of tokens. Each pruning layer comprises an I-stage and an S-stage: the I-stage prunes unimportant tokens of an image, such as background tokens (see (b)); the S-stage prunes tokens that are too similar to others, such as repetitive texture tokens (see (c)). Combining the two stages maximally exploits token redundancy (see (d)).
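
The two stages can be illustrated with a simplified NumPy sketch. Here the I-stage keeps the top-k tokens by importance score, and the S-stage uses the scores to split the tokens into a less important and a more important half, then drops the less important tokens whose best cosine match in the other half is highest. This importance-guided bipartite split is our assumption about how the partitioning might work (it resembles the bipartite matching used by Token Merging, ToMe); the helper names `i_stage` and `s_stage` are hypothetical, and the paper's actual partitioning, similarity measure, and handling of the [CLS] token may differ.

```python
import numpy as np


def i_stage(tokens: np.ndarray, scores: np.ndarray, keep: int):
    """Hypothetical I-stage: keep the `keep` highest-scoring tokens, in original order."""
    idx = np.sort(np.argsort(scores)[::-1][:keep])
    return tokens[idx], scores[idx]


def s_stage(tokens: np.ndarray, scores: np.ndarray, num_prune: int):
    """Hypothetical S-stage: drop less important tokens that closely duplicate important ones."""
    order = np.argsort(scores)  # token indices, least important first
    half = len(order) // 2
    src, dst = order[:half], order[half:]  # importance-guided bipartite split
    assert num_prune <= len(src), "can only prune from the less important half"
    unit = tokens / np.linalg.norm(tokens, axis=1, keepdims=True)
    sim = unit[src] @ unit[dst].T  # cosine similarity, shape (len(src), len(dst))
    best = sim.max(axis=1)  # closest match of each src token among dst tokens
    drop = src[np.argsort(best)[::-1][:num_prune]]  # most redundant src tokens
    keep_mask = np.ones(len(tokens), dtype=bool)
    keep_mask[drop] = False
    return tokens[keep_mask], scores[keep_mask]


# Toy example: token 2 nearly duplicates the more important token 0.
tokens = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.01], [-1.0, 1.0]])
scores = np.array([0.4, 0.3, 0.1, 0.2])
kept_tokens, kept_scores = s_stage(tokens, scores, num_prune=1)
print(kept_scores)  # [0.4 0.3 0.2]
```

Calling `i_stage` and then `s_stage` is one way to combine the stages as in panel (d): low-importance tokens such as background are removed first, then near-duplicate tokens among the survivors.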

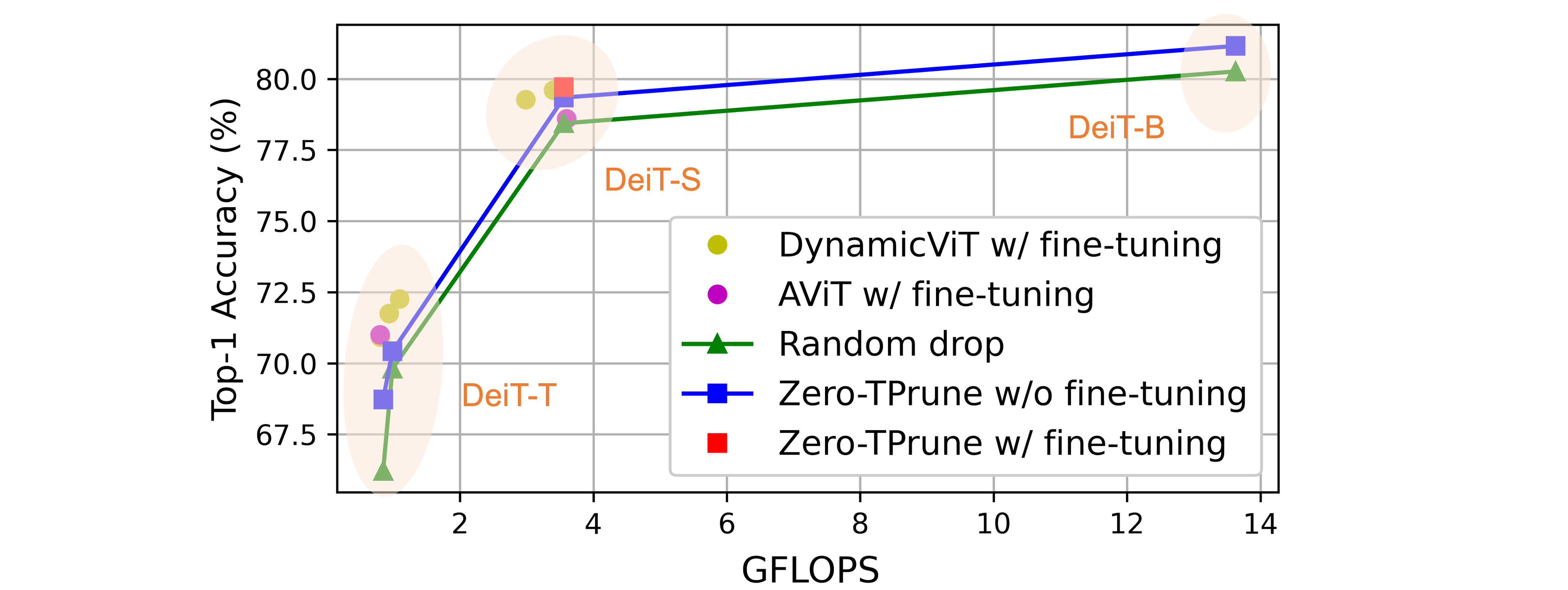

We report the top-1 accuracy, parameters, FLOPs and throughput of the pruned models on ImageNet. We evaluate the models on 224px images unless otherwise noted.

Performance comparison between Zero-TPrune and state-of-the-art methods that require fine-tuning. Without fine-tuning, the performance of DynamicViT and A-ViT equals that of randomly dropping tokens. Zero-TPrune can easily be applied to larger models (e.g., DeiT-B under a 13.6 GFLOPs budget) for higher accuracy. In contrast, applying DynamicViT and A-ViT to large models is computationally costly because of their fine-tuning process.

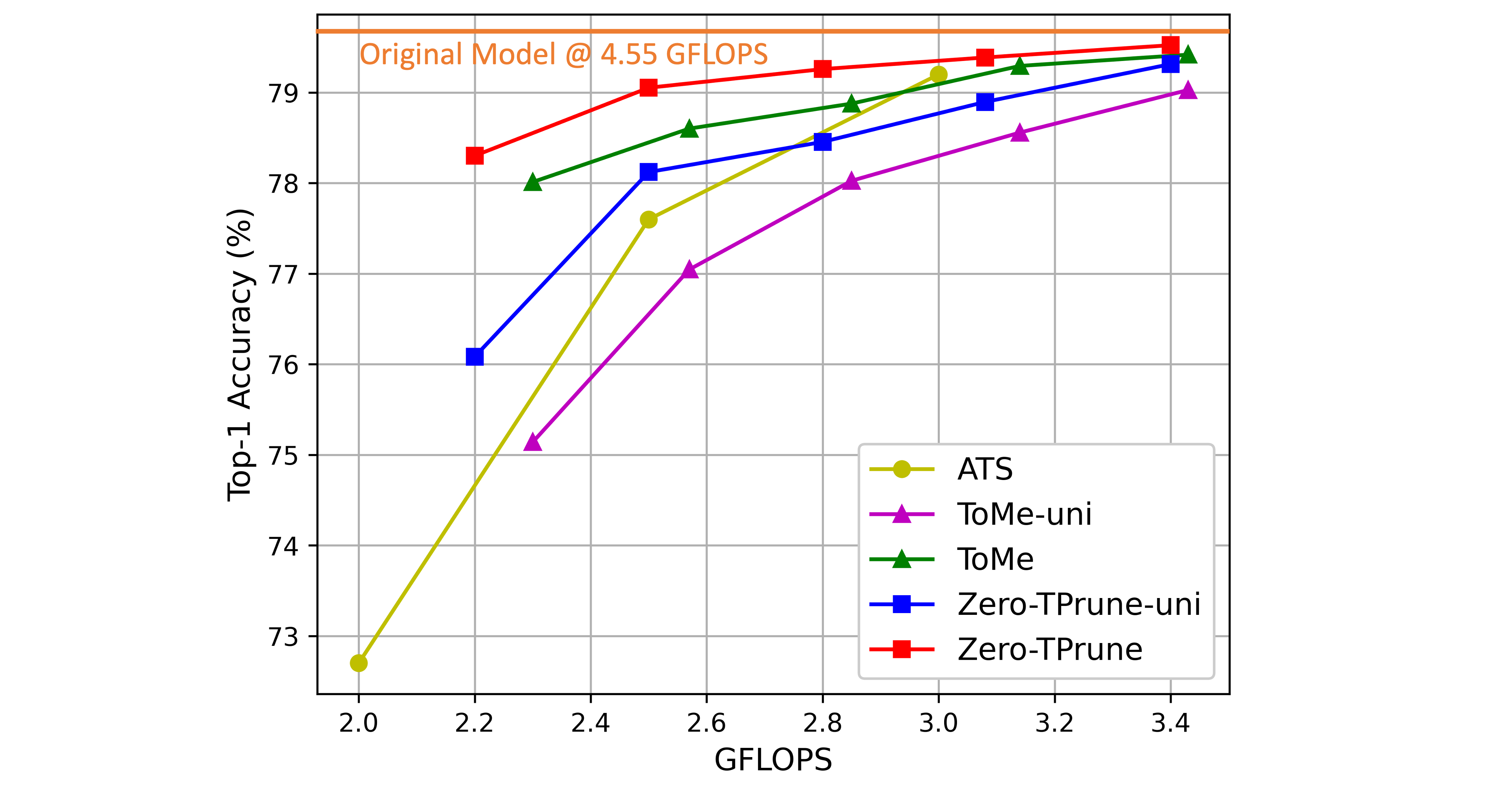

Comparison between Zero-TPrune and state-of-the-art fine-tuning-free methods. The backbone model is DeiT-S.

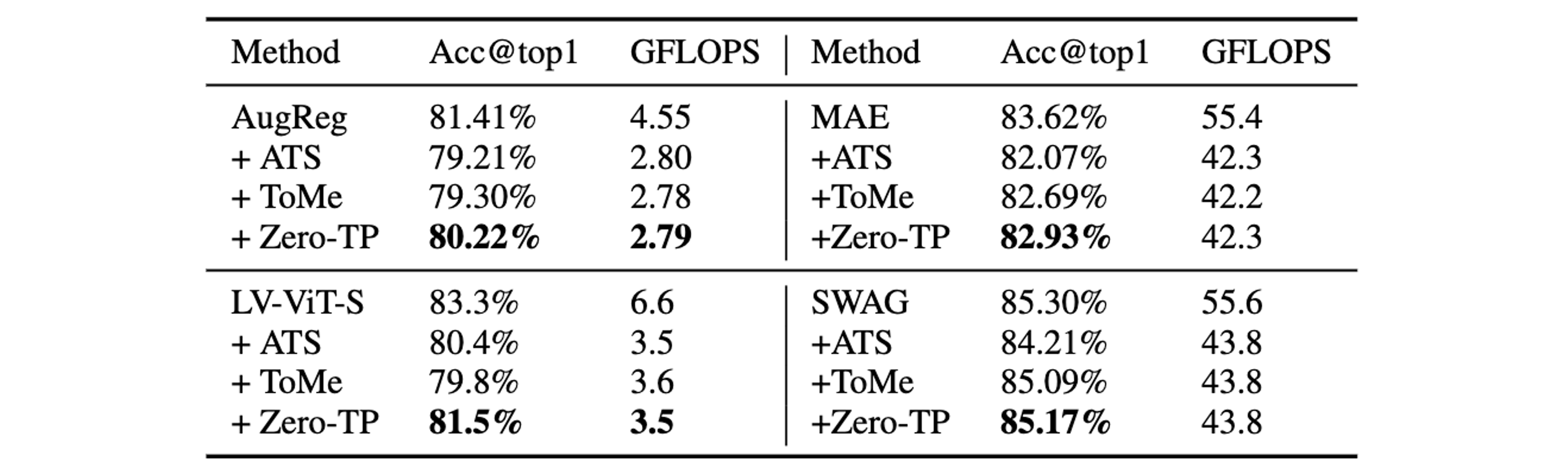

Comparison between Zero-TPrune and state-of-the-art fine-tuning-free methods on various backbones. SWAG models perform inference on 384px images.

@article{wang2023zero,
  title={Zero-TPrune: Zero-Shot Token Pruning through Leveraging of the Attention Graph in Pre-Trained Transformers},
  author={Wang, Hongjie and Dedhia, Bhishma and Jha, Niraj K.},
  journal={arXiv preprint arXiv:2305.17328},
  year={2023}
}